From Whispers to Ubiquity: The Evolution of Voice-Controlled Devices

Join us on a human-centered journey from early lab curiosities to everyday companions, blending facts, lived moments, and future horizons. Subscribe and share your earliest voice-command memory to help shape tomorrow’s conversations.

Origins: Dreams, Demos, and First Words

In 1952, Bell Labs’ Audrey recognized spoken digits, and a decade later IBM’s Shoebox handled digits plus a handful of spoken arithmetic commands. These clunky demos felt magical, hinting that machines might someday listen, understand, and help, long before anyone imagined wake words or streaming music in the living room.

Star Trek’s conversational computer and HAL 9000 from 2001: A Space Odyssey inspired generations of engineers to chase natural dialogue. Cultural imagination mattered: fiction set expectations, nudging researchers to shrink error rates and bring friendly, helpful voices out of the screen and into our daily routines.

Recognition Breakthroughs: How Machines Learned to Listen

From HMMs to Language Models

Hidden Markov Models once dominated speech recognition, paired with n‑gram language models. The HMMs modeled how sounds map to phonemes over time, and the n‑grams scored which word sequences were plausible, so the recognizer guessed less and word error rates gradually fell. It was painstaking progress, yet it opened the door to larger datasets and more ambitious, conversational applications.
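Word error rate is the yardstick behind that progress, so it helps to see how it is usually computed. The snippet below is a minimal sketch, not any vendor's scoring tool: it counts the word-level substitutions, deletions, and insertions needed to turn the recognizer's output into the reference transcript, then divides by the number of reference words. The function name `word_error_rate` and the lowercase, whitespace-split tokenization are our own simplifying assumptions.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    # Assumption: lowercase + whitespace splitting is good enough for a demo.
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = edits between the reference words seen so far
    # and the first j hypothesis words (starts with zero reference words).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # i reference words vs. an empty hypothesis = i deletions
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    print(word_error_rate("turn on the kitchen lights",
                          "turn on the chicken light"))
```

If an assistant hears "turn on the chicken light" when you said "turn on the kitchen lights", two of five words are wrong, so the score is 0.4. Lower is better, and 0.0 means a perfect transcript.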

Deep Learning and End‑to‑End Systems

Deep neural networks, first as acoustic models inside HMM systems, then LSTMs, CNNs, and finally Transformers, drove steep drops in error rates. End‑to‑end models learned features directly from audio and coped far better with accents and noise. Assistants could now understand complex commands without rigid phrasing, making conversation feel less like programming and more like speaking to a helpful companion.

Siri Opens the Door

Siri’s 2011 launch on the iPhone 4S turned mainstream attention to voice, bundling speech, search, and services in people’s pockets. Hands‑free tasks such as texts, reminders, and directions felt natural on the go. That pivotal moment reframed voice not as a tech demo, but as a daily utility worth refining and trusting.

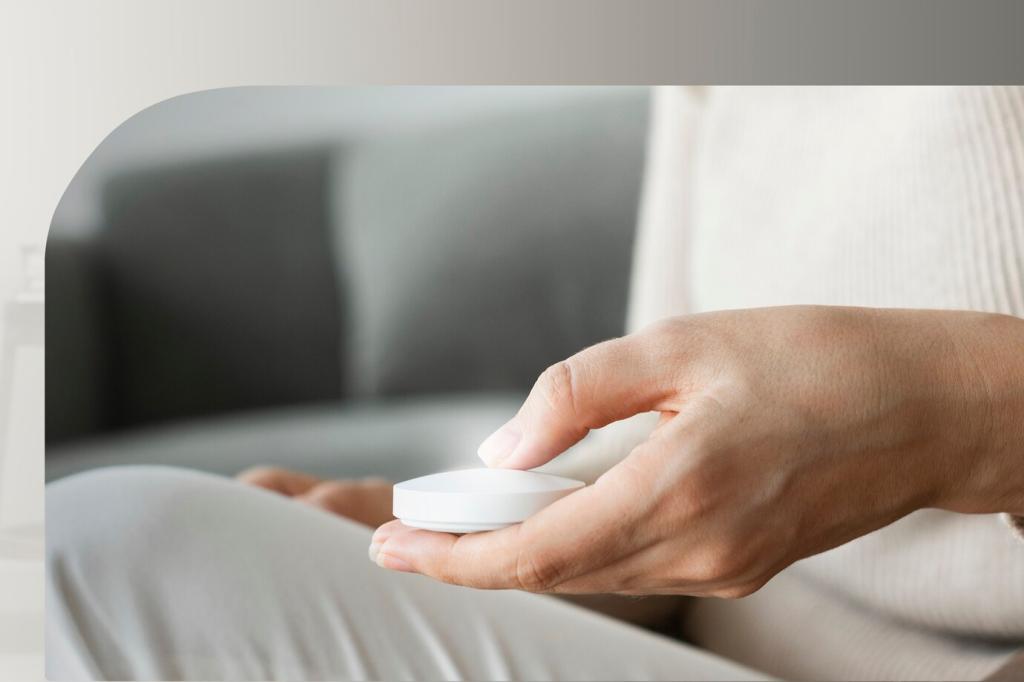

Always‑On at Home

Smart speakers brought hands‑free help to the countertop: timers, music, shopping lists, and quick answers. Families began settling trivia debates mid‑dinner. Kids asked for dinosaur facts. The living room became a proving ground for frictionless interaction, nudging ecosystems to add routines, skills, and multi‑device handoffs.

How Do You Use Yours?

What’s your most-used voice routine—bedtime lights, morning headlines, or navigation on commutes? Tell us how you rely on voice today, and subscribe to get practical tips, hidden features, and reader-tested routines that make assistants feel smarter without adding complexity or clutter to your life.

Intent, Context, and Repair

Great assistants infer your intent, remember context, and gracefully repair misunderstandings. Confirmation prompts, follow‑ups, and clarifying questions turn errors into collaboration. Designers iterate scripts like playwrights, refining turns until conversation flows—useful, concise, and surprisingly human without veering into uncanny territory or frustrating repetition.
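For readers who build voice flows, here is one way the repair idea might look in code. It is a rough sketch under our own assumptions, not how any particular assistant works: the `Interpretation` class, the `next_turn` function, and both thresholds are hypothetical. The assistant acts when it is confident, asks for confirmation when it is fairly sure, and offers a choice when it is not.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Interpretation:
    intent: str        # what the assistant thinks you asked for
    confidence: float  # hypothetical recognizer score between 0 and 1


# Hypothetical thresholds; a real product would tune these with user testing.
ACT_AT_OR_ABOVE = 0.80
CONFIRM_AT_OR_ABOVE = 0.50


def next_turn(candidates: List[Interpretation]) -> str:
    """Pick the assistant's next line: act, confirm, clarify, or re-prompt."""
    if not candidates:
        return "Sorry, I didn't catch that. Could you say it again?"

    ranked = sorted(candidates, key=lambda c: c.confidence, reverse=True)
    best = ranked[0]

    if best.confidence >= ACT_AT_OR_ABOVE:
        return f"OK, doing: {best.intent}"
    if best.confidence >= CONFIRM_AT_OR_ABOVE:
        # Confirmation prompt: cheap for the user to answer yes or no.
        return f"Did you mean: {best.intent}?"
    # Clarifying question: offer the top two guesses instead of guessing.
    options = " or ".join(c.intent for c in ranked[:2])
    return f"I'm not sure. Did you want {options}?"


if __name__ == "__main__":
    print(next_turn([Interpretation("call mom", 0.42),
                     Interpretation("call tom", 0.40)]))
```

The design choice worth noticing is that a low score never leads to a silent wrong action. Each branch hands the user a short, answerable question, which is what turns an error into collaboration.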

Tone, Personality, and Trust

A voice’s warmth, pacing, and word choice influence adoption. Friendly yet efficient personas reduce cognitive load and feel respectful of time. Transparency builds trust: explaining actions, citing sources, and acknowledging limits. Share which assistant voice you prefer and why; your feedback guides our ongoing voice UX experiments.

Teach Us Your Flows

Do you design for voice or build routines at home? Post your favorite multi‑step flow—like dim lights, start playlist, and read headlines—and subscribe for a monthly teardown where we analyze reader submissions, suggest improvements, and publish templates you can adapt across ecosystems without headaches.

From Cloud to Edge

On‑device models reduce latency, enable offline features, and keep more audio local. Dedicated low‑power chips now handle wake words, noise suppression, and even transcription. This architectural shift helps protect privacy while making assistants faster, especially in cars, elevators, and rural areas with spotty connections.
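To make the cloud-versus-edge split concrete, the sketch below shows a local-first routing rule. Everything in it is an illustrative assumption: the `LOCAL_INTENTS` set, the `Request` class, and the `route` function are hypothetical names, and real assistants decide this in more nuanced ways.

```python
from dataclasses import dataclass

# Hypothetical list of requests simple enough to handle on the device.
LOCAL_INTENTS = {"set_timer", "stop", "volume_up", "volume_down"}


@dataclass
class Request:
    intent: str   # already-recognized intent name (assumed for this sketch)
    online: bool  # whether the device currently has a connection


def route(request: Request) -> str:
    """Return where the request would be handled in this simplified model."""
    if request.intent in LOCAL_INTENTS:
        # Local-first: no network round trip, and the audio stays on the device.
        return "device"
    if request.online:
        return "cloud"
    # Offline and too complex for the device: say so rather than fail silently.
    return "unavailable"


if __name__ == "__main__":
    print(route(Request("set_timer", online=False)))
    print(route(Request("weather_forecast", online=False)))
```

Checking the local list before the connection is what keeps a timer working in an elevator, while a weather question in the same spot gets an honest "unavailable".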

Consent, Controls, and Clarity

Clear mic indicators, easy deletion of recordings, and granular permissions are essential. People deserve to know what is stored, when it is processed, and why. Tell us which privacy controls you use most, and subscribe for our upcoming checklist on auditing voice settings across platforms.

Ethical Guardrails in Everyday Use

Bias in recognition can exclude voices; minimizing it requires diverse training data and robust evaluation. We favor transparency about human review of recordings, privacy protections for kids, and accessible opt‑outs. Share your concerns and feature wishes; we compile reader insights into an open, evolving framework for ethical voice design.

What’s Next: Multimodal, Ambient, and Personal

Voice shines alongside visuals, touch, and glance. Imagine saying “zoom in,” while your gaze anchors the map, or dictating notes that appear contextually grouped on a watch. Share your dream interaction, and subscribe for prototypes exploring voice plus vision without overwhelming users or hiding essential choices.

Assistants will anticipate needs with richer on‑device context: suggesting umbrellas before storms or routing around traffic automatically. Guardrails matter—clear opt‑ins, explanations, and quick overrides. Tell us where proactive help feels welcome versus intrusive; your insights will shape our principles for responsible ambient intelligence.